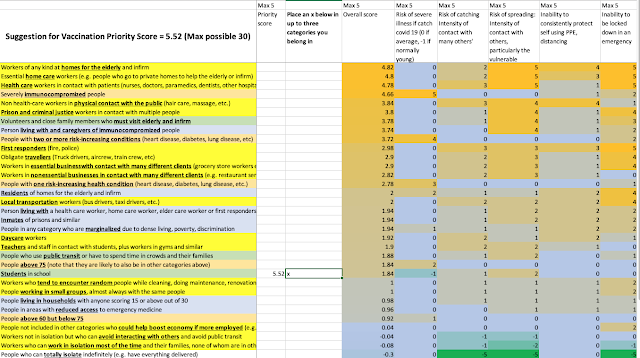

I have created a spreadsheet in Google Sheets to suggest a prioritization scheme for Covid-19 vaccinations. People with the highest score in this scheme ought, I think, to be vaccinated first.

If you have a Google account, you can click here to make a copy of the spreadsheet in your account. Or if you have Excel, you can download a copy here. The spreadsheet is called VaccinationPriority.xlsx.

In your copy, place an x in column C of any of the rows corresponding to categories of risk factors that apply to you. Your suggested vaccination priority will appear in cell A2 (a value up to 30).

Please note: This is not medical advice, and public health authorities will want to set up their own schemes. The idea of this spreadsheet is simply to present one scheme for how these priorities could be set. In fact, a website with calculations like this could be used to actually schedule people into waves at vaccination clinics.

The image of the spreadsheet (below) shows how this works.

The risk categories (rows) include type of work (background yellow), health status (background light orange), living situation (background light blue), and places you go other than for work (background light green). Up to three categories (the most risky ones that apply to you) are used to calculate your score.

Columns E through I show five risk factors (with values up to 5) for each of the risk categories. These factors are the following:

- Column E: Risk of severe illness if you catch Covid-19. This mostly applies to people with risk categories relating to their health. Immunocompromised people are given a risk level of 5, followed by people with multiple specific diseases such as heart disease and diabetes. Being elderly is a factor here too, but is weighted less.

- Column F: Risk of catching Covid-19 because your risk categories tend to put you in contact with other people (perhaps high risk people) and perhaps for extended times in transmission-prone environments. Health care workers, first responders and those whose work puts them in direct and close contact with people (e.g. hairdressers) are given the highest risk factor here. But the risks are only set at a maximum of 3 since PPE can likely mitigate the risk if used properly.

- Column G: Risk of spreading Covid-19 to others if you do catch it and are in contact with others before diagnosis. Home care workers, workers at care homes, and health care workers are considered the highest risk here. Whereas vaccination due to risks in columns E and F is primarily to protect the person vaccinated, Column G is all about protecting others.

- Column H: Risk due to being potentially unable to protect yourself sufficiently using PPE. Home care workers and people like hairdressers have the highest risk here.

For any risk category (row), 90% of the score comes from the maximum value across the above four columns.

- Column I: Feasibility of being fully locked down in an emergency. If Covid-19 surges where you are, can you simply eliminate the risk factor? Some kinds of workers have been and will likely continue to work at home, or could start to do so. Risk factors relating to illnesses have non-zero values here because they may need ongoing medical attention. I have given this only 10% of the weight in the row (risk category).
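The 90%/10% weighting described above can be sketched in a few lines of Python. This is only an illustration of the idea, assuming the row score is 0.9 times the largest of columns E to H plus 0.1 times column I; the exact formula in the spreadsheet may differ, and the function name, parameter names and input values below are hypothetical.

```python
def category_score(severity, catching, spreading, ppe_gap, lockdown):
    """Score one risk category (one spreadsheet row).

    severity, catching, spreading, ppe_gap stand for columns E to H;
    lockdown stands for column I. The 0.9 / 0.1 split is an assumption
    based on the weights described in the text.
    """
    worst_factor = max(severity, catching, spreading, ppe_gap)
    return 0.9 * worst_factor + 0.1 * lockdown


# Made-up factor values, for illustration only.
example = category_score(severity=0, catching=3, spreading=5, ppe_gap=5, lockdown=3)
print(round(example, 2))
```

Because only the largest of the four factors counts, a category that is high on one factor scores about the same as one that is high on several.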

Note that some risk factors and risk scores are negative. For example, whereas most risk categories are scored as zero for risk of severe illness, I have marked students as -1 for this factor, as most of them are young. Similarly, people who can isolate a lot can have negative values for risk of catching and risk of spreading. These negative values can counteract positive values, such as being elderly.

Your ultimate score is calculated as 3 times your top risk category score, plus 2 times your second risk category score (if you have one) plus your third risk category score. The highest possible value, with an x in cells C3, C4 and C5 (a care home medical worker who also gives care at private homes) would be 28.84.
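As a rough sketch, the 3-2-1 weighting can be written as a short Python function. The function name is hypothetical, and the handling of negative category scores (simply including them if they are among your top three) is an assumption; the spreadsheet may treat them differently.

```python
def priority_score(category_scores):
    """Combine the scores of the categories that apply to one person.

    The highest score is weighted 3, the second highest 2, and the
    third highest 1. Any further categories are ignored, and missing
    categories contribute nothing.
    """
    weights = (3, 2, 1)
    top_three = sorted(category_scores, reverse=True)[:3]
    return round(sum(w * s for w, s in zip(weights, top_three)), 2)


# The maximum case from the text: category scores 4.82, 4.8 and 4.78.
print(priority_score([4.82, 4.8, 4.78]))  # 28.84
```

Someone with no applicable categories gets `priority_score([])`, which is 0.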

My score is zero. I actually have no risk categories! I can work in isolation most of the time, but I do have children in school, so I do not fit rows 33 or 34. My kids, who are students, would have a score of 5.52, which is quite low, but higher than mine, so they should be vaccinated before me. This is illustrated in the image below. If the University resumes in-person classes, then as a teacher I would have a score of 5.7. I am not visiting my elderly father currently (and haven't since the pandemic started), but if I needed to on a regular basis, while also teaching, then my score would be 15.14. My father, who is over 75 but can isolate, would have a score of 5.52.

This spreadsheet can be used to help Boards of Health decide on vaccination priority. The basic idea is that vaccination should be prioritized for those with higher risk categories, and that multiple risk categories should be combined (with decreasing weights rather than by simple addition).

The following is a closer look at the risk categories, from highest to lowest, and their calculated scores. Your score would be three times the highest category in the list that applies to you, plus two times the second highest, plus the value of the third highest.

| Risk category | Score |
| --- | --- |
| Workers of any kind at homes for the elderly and infirm | 4.82 |
| Essential home care workers (e.g. people who go to private homes to help the elderly or infirm) | 4.8 |
| Health care workers in contact with patients (nurses, doctors, paramedics, dentists, other hospital/clinic workers) | 4.78 |
| Severely immunocompromised people | 4.66 |
| Non health-care workers in physical contact with the public (hair care, massage, etc.) | 3.84 |
| Prison and criminal justice workers in contact with multiple people | 3.8 |
| Volunteers and close family members who must visit elderly and infirm | 3.78 |
| People living with, and caregivers of, immunocompromised people | 3.74 |
| People with two or more risk-increasing conditions (heart disease, diabetes, lung disease, etc.) | 3.72 |
| First responders (fire, police) | 2.98 |
| Obligate travellers (truck drivers, aircrew, train crew, etc.) | 2.9 |
| Workers in essential businesses with contact with many different clients (grocery store workers, etc.) | 2.9 |
| Workers in nonessential businesses in contact with many different clients (e.g. restaurant servers) | 2.82 |
| People with one risk-increasing health condition (heart disease, diabetes, lung disease, etc.) | 2.78 |
| Residents of homes for the elderly and infirm | 2 |
| Local transportation workers (bus drivers, taxi drivers, etc.) | 2 |
| People living with a health care worker, home care worker, elder care worker or first responder | 1.94 |
| Inmates of prisons and similar | 1.94 |
| People in any category who are marginalized due to dense living, poverty, discrimination | 1.94 |
| Daycare workers | 1.92 |
| Teachers and staff in contact with students, plus workers in gyms and similar | 1.9 |
| People who use public transit or have to spend time in crowds and their families | 1.88 |
| People above 75 (note that they are likely to also be in other categories above) | 1.84 |
| Students in school | 1.84 |
| Workers who tend to encounter random people while cleaning, doing maintenance, renovations, etc. | 1 |
| People working in small groups, almost always with the same people | 1 |
| People living in households with anyone scoring 15 or above out of 30 | 0.98 |
| People in areas with reduced access to emergency medicine | 0.96 |
| People above 60 but below 75 | 0.92 |
| People not included in other categories who could help boost economy if more employed (e.g. allowing businesses to open more fully) | 0.04 |
| Workers not in isolation but who can avoid interacting with others and avoid public transit | -0.04 |
| Workers who can work in isolation most of the time and their families, none of whom are in other categories | -0.08 |
| People who can totally isolate indefinitely (e.g. have everything delivered) | -0.3 |